CarlaSC

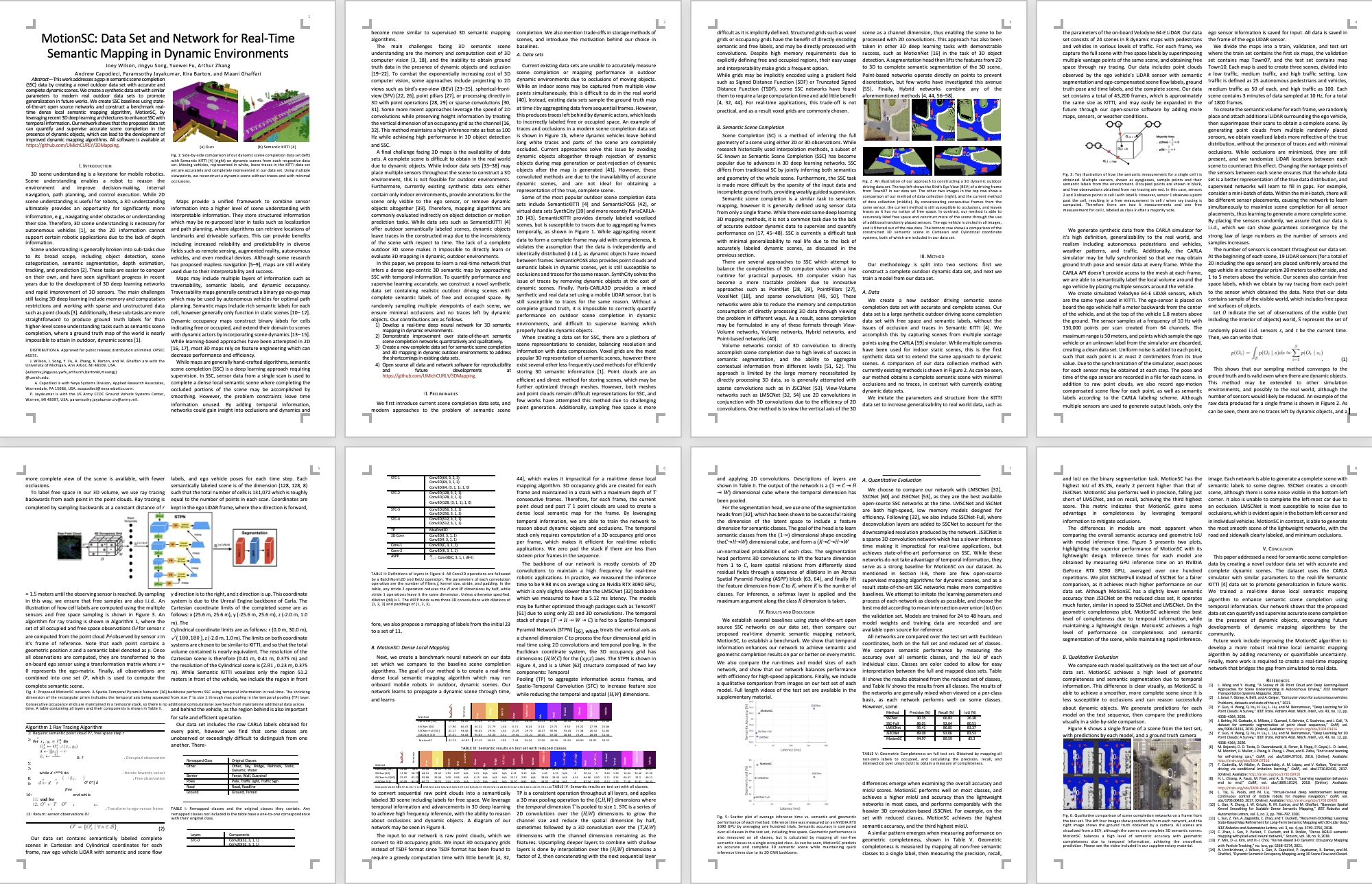

A Data Set (CarlaSC) and Network (MotionSC) for Real-Time Semantic Mapping in Dynamic Environments

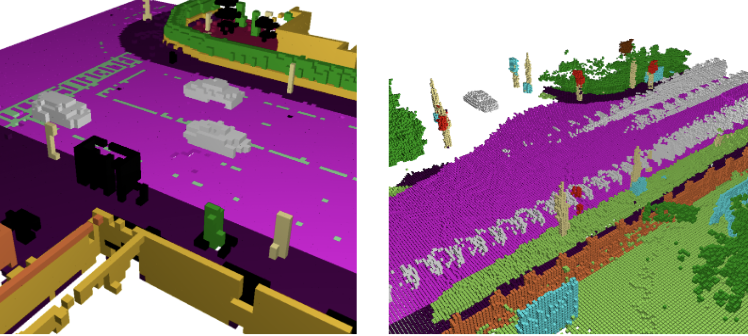

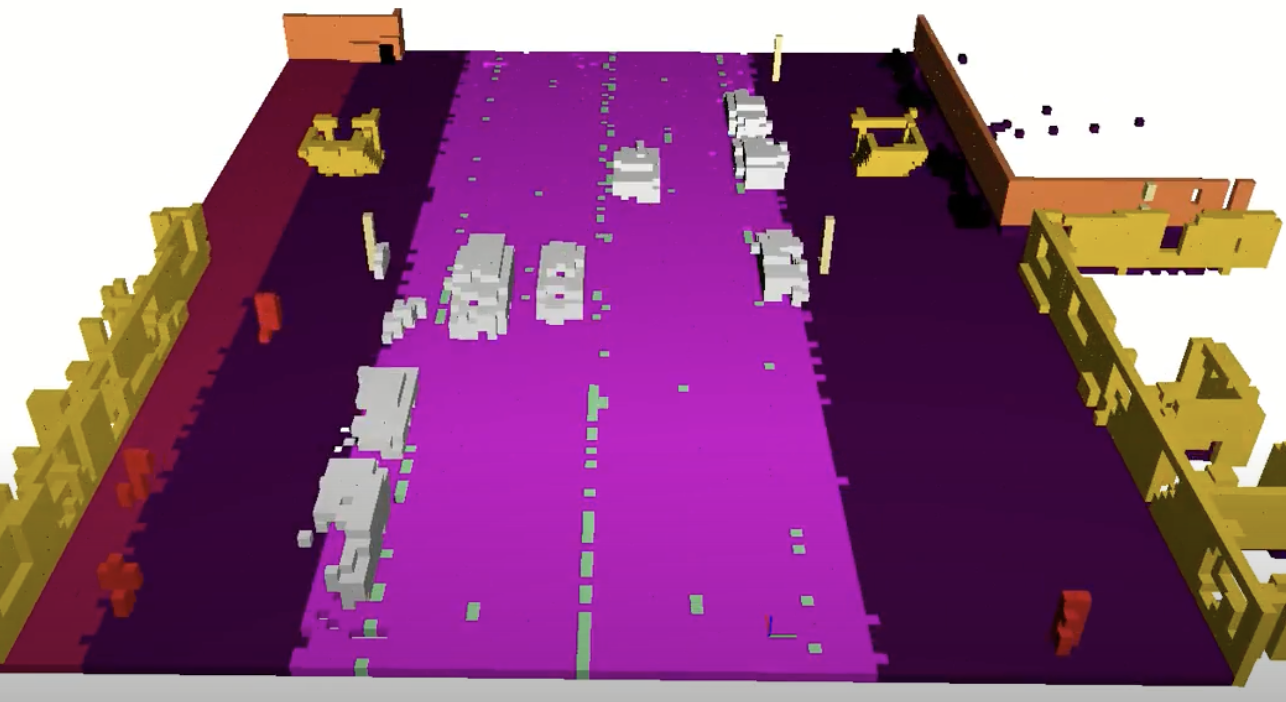

CarlaSC scenes are sampled from multiple viewpoints, which minimizes occlusions and leaves no traces of dynamic objects. The image above compares CarlaSC with SemanticKITTI, another well-known vision benchmark, and shows that CarlaSC is free of the traces that dynamic objects leave behind.

Data is captured at 10 Hz, and ground-truth semantic labels and scene flow are provided for every frame. This gives more information for scene understanding across multiple scans.
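As a rough illustration of what per-frame scene flow at 10 Hz means in practice, the sketch below computes frame timestamps and advances points by their flow vectors over one frame interval (0.1 s). It assumes the flow is a per-point velocity in m/s; that unit, and the helper names `frame_timestamps` and `propagate`, are hypothetical and not taken from the CarlaSC tools. If the dataset stores per-frame displacements instead, the multiplication by `dt` should be dropped.

```python
# Minimal sketch: relate the 10 Hz capture rate to per-frame timing and use a
# scene-flow vector to predict where a point will be in the next frame.
# ASSUMPTION (hypothetical): flow is a per-point velocity in m/s. If flow is
# stored as per-frame displacement, add it directly without scaling by dt.

CAPTURE_HZ = 10.0          # capture rate stated for CarlaSC
DT = 1.0 / CAPTURE_HZ      # seconds between consecutive frames (0.1 s)


def frame_timestamps(num_frames, hz=CAPTURE_HZ):
    """Return the timestamp in seconds of each frame, starting at t = 0."""
    return [i / hz for i in range(num_frames)]


def propagate(points, flow, dt=DT):
    """Advance each (x, y, z) point by its flow vector over dt seconds."""
    if len(points) != len(flow):
        raise ValueError("points and flow must have the same length")
    return [
        tuple(p_i + v_i * dt for p_i, v_i in zip(p, v))
        for p, v in zip(points, flow)
    ]


if __name__ == "__main__":
    print(frame_timestamps(4))  # [0.0, 0.1, 0.2, 0.3]
    points = [(10.0, 0.0, 0.5), (5.0, 2.0, 0.0)]
    flow = [(5.0, 0.0, 0.0), (0.0, 0.0, 0.0)]  # a moving car point and a static point
    print(propagate(points, flow))  # [(10.5, 0.0, 0.5), (5.0, 2.0, 0.0)]
```

Static points have zero flow and stay in place, so comparing consecutive frames through the flow field separates moving objects from the static background.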

CarlaSC is generated using CARLA, an open-source simulator for autonomous driving research. This makes the data highly customizable, from the number of dynamic objects to the position and number of sensors.

A description of the dataset and its properties can be found on the Dataset page of this website. To download the Cartesian or Cylindrical version of the dataset, please visit the Download page. An example of how to use the dataset can be found in the MotionSC GitHub repository, which contains the CarlaSC dataloader used in the MotionSC paper as well as Python visualization scripts.
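To give an intuition for the Cartesian and Cylindrical versions, the sketch below assigns the same LiDAR point to a voxel in a Cartesian grid and in a cylindrical (radius, azimuth, height) grid. It assumes the two versions differ in how space is voxelized; every bound, cell size, and dimension here, and the helpers `cartesian_to_cylindrical` and `voxel_index`, are hypothetical placeholders rather than the actual CarlaSC parameters or dataloader API.

```python
import math

# Minimal sketch of how a Cartesian and a cylindrical voxel grid index the same
# point. ASSUMPTION: the "Cartesian" and "Cylindrical" dataset versions differ
# in how space is voxelized. All grid parameters below are HYPOTHETICAL.


def cartesian_to_cylindrical(x, y, z):
    """Return (r, theta_deg, z) with the azimuth wrapped into [-180, 180)."""
    theta = math.degrees(math.atan2(y, x))
    if theta >= 180.0:  # atan2 can return +pi; fold it onto -180 deg
        theta -= 360.0
    return math.hypot(x, y), theta, z


def voxel_index(coords, mins, cell_sizes, dims):
    """Map a coordinate tuple to integer voxel indices, or None if outside the grid."""
    idx = tuple(
        math.floor((c - lo) / size) for c, lo, size in zip(coords, mins, cell_sizes)
    )
    if all(0 <= i < d for i, d in zip(idx, dims)):
        return idx
    return None


# Hypothetical Cartesian grid: x, y in [-25, 25) m, z in [-2, 6) m, 0.5 m cubes.
CART_GRID = dict(mins=(-25.0, -25.0, -2.0), cell_sizes=(0.5, 0.5, 0.5), dims=(100, 100, 16))

# Hypothetical cylindrical grid: r in [0, 25) m, azimuth in [-180, 180) deg,
# z in [-2, 6) m; 0.5 m radial bins, 1 degree azimuth bins, 0.5 m height bins.
CYL_GRID = dict(mins=(0.0, -180.0, -2.0), cell_sizes=(0.5, 1.0, 0.5), dims=(50, 360, 16))


if __name__ == "__main__":
    point = (10.0, 0.0, 0.5)
    print(voxel_index(point, **CART_GRID))                            # (70, 50, 5)
    print(voxel_index(cartesian_to_cylindrical(*point), **CYL_GRID))  # (20, 180, 5)
```

In a cylindrical grid, the area covered by an azimuth bin grows with distance from the sensor, which roughly follows how LiDAR point density falls off with range; a Cartesian grid keeps every cell the same size.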

See our paper below for more information and our network baseline results:

If you plan to use our dataset and tools in your work, we would appreciate it if you could cite our paper. (PDF)